[The Monthly Mean] July/August 2012 -- How to write a discussion section

The Monthly Mean is a newsletter with articles about Statistics with occasional forays into research ethics and evidence based medicine. I try to keep the articles non-technical, as far as that is possible in Statistics. The newsletter also includes links to interesting articles and websites. There is a very bad joke in every newsletter as well as a bit of personal news about me and my family.

Welcome to the Monthly Mean newsletter for July/August 2012. If you are having trouble reading this newsletter in your email system, please go to www.pmean.com/news/201207.html. If you are not yet subscribed to this newsletter, you can sign on at www.pmean.com/news. If you no longer wish to receive this newsletter, there is a link to unsubscribe at the bottom of this email. Here's a list of topics.

--> How to write a discussion section

--> Setting up a data archive

--> Using statistics to justify your belief in God

--> Monthly Mean Article (peer reviewed): Learning from Failure - Rationale and Design for a Study about Discontinuation of Randomized Trials (DISCO study)

--> Monthly Mean Article (popular press): Census surveys: Information that we need

--> Monthly Mean Book: How We Know What Isn't So: The Fallibility of Human Reason in Everyday Life

--> Monthly Mean Definition: What are the inner and outer fences in a boxplot?

--> Monthly Mean Quote: Statistics are for losers.

--> Monthly Mean Video: Why we (usually) don't have to worry about multiple comparisons

--> Monthly Mean Website: How to be the perfect research student, part 1: process matters

--> Nick News: Nick goes crazy fishing

--> Very bad joke: The probability of someone watching you...

--> Tell me what you think.

--> Join me on Facebook, LinkedIn and Twitter

--> Permission to re-use any of the material in this newsletter

--> How to write a discussion section. I am sharing an early draft of a chapter of a book I am working on. The book talks about what steps you should take in a research project if you feel like you're "stuck" and you're not making any progress. This chapter is guidance if you are having difficulty writing the discussion section of a research paper. I'd love feedback from any or all of you.

The discussion section of a research paper is typically the last part of the paper that you will write, because it draws on information from the literature review, the methods section, and the results section. It is not a rehash of any of these sections, but rather a synthesis.

The discussion section is your chance to answer the "so what" question about your work. You need to place your work in the context of previous research and discuss the persuasiveness of your findings. Don't be shy here. If your research is a lot better than any previous work, tell your readers this. Likewise, tell your readers if you've filled an important knowledge gap or if you've produced novel insights. In other words, brag a little bit.

I'm holding my breath when I tell you to brag. If there's a fault with discussion sections, it is when writers grossly overstate the significance of their research. There is no shame in conducting and reporting a "weak" research study. It only becomes a sin if you pretend that a weak study is more definitive than it deserves to be.

But you're not one of these types. The writers who overstate things never get stuck. You're stuck because you're too tentative. Adopting a cautious "yes, but" tone has filled your heart with gloom and weighed you down. It is better to start off brashly so as to get something down on paper. You can always tone it down later.

Also keep in mind that most of your readers will know less about your research area than you do. If you leave the true significance of your research unstated out of a sense of false modesty, your readers will likely miss your point.

The discussion section is also your chance to be a little bit "bossy." If your research supports the need for changes in clinical practice, tell us what you think those changes should be. If it supports a change in regulations or laws, tell us that also. Tell us the future research directions that your current findings might suggest.

The discussion section is also the section for speculation. Not wild unsupported speculation, of course, but reasonable extrapolations beyond the strict bounds of your data. If your data calls into question a proposed mechanism of action, for example, you can suggest an alternative mechanism. If your proposed intervention did not work as intended, you can speculate on what changes in your intervention might have led to a different outcome.

If you are stuck on writing a discussion section, here are some steps you can take to get going again.

1. Compare/contrast your results to previous results.

2. List the strengths and limitations of your study.

3. Advocate changes (or support the status quo).

4. Suggest areas for further research.

Compare/contrast your results to previous results.

You've already written your literature review. Hey, you wrote it back as part of the initial grant application and IRB submission. Dust it off and look at it in light of your results. Your results either confirm or contradict previous research.

If your work confirms previous research, writing is easy. State that your results are consistent with previous work. There's not much more to say, but you can relax because you are not stepping on the toes of previous researchers (one of whom might be a referee for your paper). Start up next with a new paragraph explaining how your research extends previous findings (see below).

If your work contradicts previous research, writing is easy. State that your results are inconsistent with previous work. You now have a lot to write about. Explain why you think your results are different.

Yes, yes, I know that everyone else was wrong and your experiment is the only one to draw the right conclusion. It's okay to say this, but anticipate what objections might be raised by the people who conducted the earlier studies. As noted above, one of these researchers might be a peer-reviewer, and it is better for you to mention their objection than for them to point it out to you in their report.

Dismiss these alternative explanations if they are not valid, but mention them explicitly and explain why they are invalid. Your reviewer may still accuse you of not understanding things as well as they do, but at least they won't believe that you are so naive that you can't even envision the alternate explanation.

This last point is critical. You are allowed to be bold in your assertions in the discussion section, but you also need to show respect for competing viewpoints. There's too much acrimony in research circles, and much of it gets started when people ignore or trash competing perspectives.

It may be worthwhile to bring in some of the later discussion about strengths and weaknesses and place it here instead, if you think that a weakness of previous research (or, God forbid, a weakness of your current research) is a possible explanation for the disparate findings.

If your work contradicts half of the previous research and supports the other half, your job is easy. Your research has helped resolve a burning controversy. Think about differences between your half and the other half and speculate on why these differences might produce discrepant results.

Also talk about how your research extends previous findings. Do you know more because of your data than you knew before? Did you replicate previous findings, but in a different population? Did your study show long term trends similar to those demonstrated in previous short term studies?

List the strengths and limitations of your study.

If you haven't already discussed it as part of the comparison of your work to previous research, write a few paragraphs now. Start by going back to the discussions you had with the other researchers on your team. Unless you had unlimited resources, you probably made some compromises in your research plan. Try to remember these discussions and document those things that you wish you had done, but couldn't.

Some weaknesses appear during the conduct of the research itself. Think about those times when you slapped your forehead and thought how stupid you were not to prepare for THIS. Your intervention was so complicated that most of the doctors messed it up. You let some patients into your study who clearly met all of your inclusion criteria, but they still were lousy candidates for your intervention. You failed to record some vital information, and it's too late to go back and get it now.

Don't apologize for a weakness, but don't cover it up either. It is what it is. You might be worried that it will hurt your chances for publication to be honest here. Please don't worry. You are more likely to get rejected for failure to note a critical weakness, because then you are perceived as conducting bad research AND being so naive as to not know how bad it is.

It's like the lesson my piano teacher gave me--if you're going to make a mistake, make a loud mistake. Most beginners like me play weakly and tentatively. Better to be bold and make mistakes than to play with a cowering fear of mistakes.

So in your paper state as bluntly as you can what your limitations are. Remember these for later when you talk about future directions. Some of your future directions will be to replicate your current study, but using a better research plan that addresses some of your current limitations.

Advocate changes (or support the status quo)

When you made the decision to start a research project, you had a reason. I'll bet you a thousand dollars, it was because something bugged you. Am I right? In my experience, you start a research project, because everybody is doing something very bad, and only you and a few other people realize that it's bad. It bugs you, and you want to collect the data needed to show that they need to change and stop doing that bad thing. Or maybe, you start a research project because only you and a few other people are doing something good. It bugs you, and you want to collect the data needed to show everyone else that they need to change and start doing what you are doing.

What? I'm wrong? Send me an address so I can mail you that thousand dollars. I'll still come out ahead on average. The desire for change is at the heart of most research studies. Sure, you might be doing this research as a degree requirement or to get a promotion. But look past that for now. What was it that you thought needed changing back when you started the research?

Now I'm not telling you that your research results support the change that you envisioned when you started your project. Maybe the research is an intermediate step in a series of projects that will eventually lead to an important change. That's good news. Write in your discussion section that this study by itself is insufficient justification for changing things, because additional research is needed first. Then write about the additional research (see below).

If you've conducted a definitive positive study, you've got it easy. You had a hunch and the data proved you out. Congratulations! You've earned the right to be bossy. Inform us lesser mortals of the wisdom that you had at the start of the study and which now has solid data to support it.

Maybe the research is a definitive study, but a definitive negative study. A definitive negative study means that (1) you accepted the null hypothesis, (2) your confidence interval was narrow enough to rule out the possibility of anything except a clinically trivial change, and (3) there were no glaring weaknesses in your study.
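Condition (2) above can be checked mechanically once you have decided what counts as clinically trivial. Here is a minimal sketch in Python. The function name, the margin, and the numbers are all hypothetical, and the check says nothing about conditions (1) and (3).

```python
def is_definitive_negative(ci_lower, ci_upper, trivial_margin):
    """True when the whole confidence interval lies inside +/- trivial_margin.

    trivial_margin is the largest change you would still call clinically
    trivial; choosing it is a clinical judgment, not a statistical one.
    """
    return -trivial_margin < ci_lower and ci_upper < trivial_margin


# Hypothetical numbers: a difference in means with its confidence limits.
narrow = is_definitive_negative(-0.8, 1.1, trivial_margin=2.0)
wide = is_definitive_negative(-0.8, 4.5, trivial_margin=2.0)
print(narrow)  # True: every plausible value is clinically trivial
print(wide)    # False: the interval still allows a meaningful change
```

The second interval is the ambiguous case: it includes zero, but it also includes changes that are large enough to matter.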

Yes, I know this is painful for you. But you knew this was a possibility when you started your study. Be proud that your sample size was large enough and your research design was rigorous enough to draw a definitive conclusion, albeit a conclusion you don't like. In theory, you are a disinterested researcher who is equally happy with any result, so try to pretend. Your research findings support the status quo and close the door on any more research in this area. Well, not ANY research, but your definitive negative finding does mean that new work has to be substantially different.

Suggest areas for further research

Now for the fun part. Tell everyone what you want to do next. Start to establish an entire research program. Dream big and pretend that you have unlimited resources. Who knows, maybe Bill Gates will be reading your article.

Now maybe you're sick of the whole research arena and want to move on to other things. Still take some time to highlight what you think others should be doing. Take some responsibility here for providing guidance to anyone else assuming your burden.

If you want to do more (and I hope you do), start thinking about selling. Here's your chance to find some collaborators and to make an initial pitch for a big new research grant. Try your best to be persuasive here. You want others to fall in love with research in this area, so they can conduct and publish extensions to your work.

The type of further research will be guided largely by your findings in this study. If you have definitive results, either positive or negative, then any new research will need substantial changes to the intervention, the patient population, or the outcome measure. If your research results are more tentative, then suggest a closer replication with only a few enhancements to your existing study.

The fly in the ointment: ambiguous results

If your results are ambiguous, writing the discussion section is very tricky. Ambiguous results occur because your confidence interval is so wide that it allows for multiple interpretations of the data. They also occur when a weak research design leaves open the possibility for more than one explanation of the research results.

This is a situation where you might need some outside help. You should get advice on how to "spin" your results. You should be bold here and promote the perspective that you really hold. Avoid a mealy-mouthed explanation that it might be THIS or it might be THAT.

But even though you promote your perspective, you do so guardedly. Talk to someone who is familiar with the area and see how they would describe your results.

Make sure that you acknowledge the alternative interpretations and do so respectfully. Present these alternatives neutrally and with an open mind. Please don't even think about criticizing these alternatives. You only have the right to be critical when your own results are unambiguous.

Also, think long and hard about WHY your results were ambiguous. This will help you write about what future research is needed to resolve your ambiguity.

Conclusion

Writing a discussion section is tough, but you already have some of the information that you need to get started. Compare/contrast your results to the results from your literature review. Then list the strengths and limitations of your study. Then be a little bossy by advocating changes and suggesting areas for further research.

Did you like this article? Visit http://www.pmean.com/category/WritingResearchPapers.html for related links and pages.

--> Setting up a data archive. One thing I've neglected in my career is data archiving. Data archiving means organizing the data that you used in a data analysis so that it is easy for someone else to recreate (and, more importantly, extend) your data analyses.

Part of setting up a data archive is simply data documentation, and that should always be a high priority. Make sure that each variable has a descriptive name, and include additional information about these variables, such as the units of measurement. This can be done in the data set itself (e.g., variable labels in SPSS) or in a separate file. Also document the levels of your categorical variables, so that you or someone else won't be left scratching your heads to figure out if 1=male and 2=female or the reverse. Again, this can be done in the data set itself (value labels in SPSS), through the use of strings instead of number codes, or in a separate file. Finally, be sure to document missing values. Just as important as the missing value code itself (usually -1 or 99) is an explanation for WHY these values are missing.
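If you keep the documentation in a separate file, it could be as simple as the following sketch, written here in Python. The variable names, codes, and explanations are made up for illustration; the point is only that labels, units, value codes, and the reasons for missing values all live in one place.

```python
# Hypothetical codebook for a small data set; every name and code below is
# invented for illustration.
CODEBOOK = {
    "sex": {
        "label": "Sex of patient",
        "units": None,
        "values": {1: "male", 2: "female"},
        "missing": {99: "not recorded on intake form"},
    },
    "sbp": {
        "label": "Systolic blood pressure",
        "units": "mm Hg",
        "values": None,  # continuous measurement, so no value labels
        "missing": {-1: "patient refused measurement"},
    },
}


def describe(variable, raw_value):
    """Translate a raw code into a readable description using the codebook."""
    entry = CODEBOOK[variable]
    if raw_value in entry["missing"]:
        return f"missing ({entry['missing'][raw_value]})"
    if entry["values"] is not None:
        return entry["values"][raw_value]
    return f"{raw_value} {entry['units']}"


print(describe("sex", 2))    # female
print(describe("sbp", -1))   # missing (patient refused measurement)
print(describe("sbp", 128))  # 128 mm Hg
```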

The process of data cleaning needs to be clearly outlined, and your archive should provide enough information so that someone could back out of an ill-advised change to the data set. When you clean your data, you should always strive for non-destructive changes to your data. If, for example, you need a new variable for race that is white versus every other category combined, then you create that as a new column rather than replace "black", "hispanic", "asian american", "native american" with "nonwhite" in the same column. In SPSS parlance, this is using "recode into a new variable" instead of "recode into the same variable".
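Outside of SPSS, the same idea looks something like this sketch in Python. The records and the column name race_white are hypothetical. The recode goes into a new column on a copy of each record, so the original race values are still there if you need to back out.

```python
# Hypothetical records; the column names are illustrative only.
rows = [
    {"id": 1, "race": "white"},
    {"id": 2, "race": "black"},
    {"id": 3, "race": "hispanic"},
    {"id": 4, "race": "asian american"},
]


def add_white_indicator(records):
    """Return copies of the records with a new race_white column added.

    The original race column is left untouched, so the recode can be undone.
    """
    recoded = []
    for record in records:
        new_record = dict(record)  # copy, so the input rows are not modified
        if record["race"] == "white":
            new_record["race_white"] = "white"
        else:
            new_record["race_white"] = "nonwhite"
        recoded.append(new_record)
    return recoded


recoded_rows = add_white_indicator(rows)
for row in recoded_rows:
    print(row["id"], row["race"], row["race_white"])
```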

Sometimes you will need to make changes in place and destroy the original values. When you do this, save the resulting file under a different name. This way, anyone who needs to get the original values just has to re-open the original file.

If you print and save copies of your data analysis output (either on paper or as electronic files), be sure to note which file was used in this data analysis. Ideally, you would not mix and match analyses on several different files. Many programs, such as SPSS, will produce information about where the file comes from at the top of the output. Make sure this gets printed or saved.

When you create a new file (other than a temporary use file), add a log entry that describes (1) what earlier file(s) were needed to create this file and (2) how it differs from the previous file. This last point is important. If some variables change and some stay the same in a new data file, anyone looking at this from the outside has to know what stays the same. In the same log, describe in a sentence or two WHY you created this file.

The log entry can be replaced or supplemented by the program that created this new file. If you refer to a program, make sure the program is devoted only to creating a new file or files and don't mix in any data analysis.
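A log entry does not need to be fancy. The sketch below, in Python, shows one possible layout. The field names and file names are hypothetical, not a standard. It records the source files, what changed, what stayed the same, and why the file was created.

```python
import json
from datetime import date


def make_log_entry(new_file, source_files, changes, unchanged, reason):
    """Build one archive log entry as a plain dictionary."""
    return {
        "date": date.today().isoformat(),
        "new_file": new_file,
        "created_from": list(source_files),
        "changes": changes,
        "unchanged": unchanged,
        "reason": reason,
    }


entry = make_log_entry(
    new_file="analysis_v2.csv",  # hypothetical file names
    source_files=["raw_intake.csv"],
    changes="Added a two-level race variable in a new column.",
    unchanged="All other variables are identical to raw_intake.csv.",
    reason="The primary analysis compares white versus all other categories.",
)

# Serialize the entry as a single line of JSON. Appending that line to a
# log file is left out here to keep the sketch free of side effects.
log_line = json.dumps(entry)
print(log_line)
```

One line per file keeps the log easy to read by eye and easy to search later.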

If you have multiple data files, split them into three groups. The first group (primary data sets) is the raw data files that you were given when you started the project. Keep these safe and never change these files.

The second group (intermediate data sets) is the data files that are stepping stones. They show changes from the first group, but they are not ready for data analysis because they need additional processing (e.g., merging with other intermediate data files). You may wish to save these files, or you may wish to save the instructions for creating and manipulating these files.

The third group is the data files intended for data analysis. These files are the ones that are either immediately ready for data analysis or which require only very minor data editing (e.g., subsetting) to get ready for data analysis. Keep these files safe. If you make changes to these files after some of the output has been printed or archived, then consider using a new name so that someone trying to recreate an existing analysis won't get confused.

An exception to grouping might be a simple setting with only one or two primary data files and where the only data management involves minor non-destructive data edits. In this case, you'd dispense with the second and third groups of files.

Did you like this article? Visit http://www.pmean.com/category/DataManagement.html for related links and pages.

--> Using statistics to justify your belief in God. Someone sent me an email mentioning Pascal's Wager. I recall talking about this in a report I gave in a "History of Mathematics" class that I took in college. Blaise Pascal, a famous mathematician, scientist, and theologian in 17th century France, used Statistics (actually Decision Theory if you want to be precise) to justify why a rational person should believe in God. I find Pascal's argument unpersuasive. For the record I believe in God, but not for any statistical reason.

The essence of Pascal's wager is that there is no rational way to prove or disprove the existence of God. So you have to choose to believe in God or not believe in God in the knowledge that your choice might be wrong. If God exists and you believe in God, your rewards are infinite. If God exists and you don't believe, your penalties are also infinite. If God does not exist, you do pay a penalty if you believe. It might be having to tithe ten percent of your income, for example, or spending thousands of dollars on a pilgrimage to Mecca (I don't think Pascal was advocating the Hajj, though). The point is that all these penalties are finite. The gains in not believing in God when God does not exist are also finite (being able to eat meat on Friday, as an example).

So when you take a non-zero probability that God exists and multiply it by the infinite gain, and then subtract off the complementary probability that God does not exist times the finite loss, you have an infinite expected reward.

Although Pascal did not express this mathematically, the formula would be

--> Expected return in believing in God = p*(infinite reward) - (1-p)*(finite loss)

Likewise choosing not to believe in God leads to infinite expected losses.

Back in the 1970s, I thought this argument relied on a rather cavalier mathematical treatment of infinity. Infinity is not a number like 12 or 1/2 or pi, and if you treat it as if it were like those finite numbers, you can produce some pretty silly conclusions. In particular, the quantity zero times infinity can, depending on the context, be equal to zero, infinity, or any number in between. The quantity of infinity can't really be expressed directly, but only through the use of limits.
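You can see both points with a few lines of Python. This is only an illustration: the probability and the finite loss are made-up values, and floating point infinity is a stand-in for the mathematical idea. The three small functions are my own examples of a product where one factor heads to zero and the other heads to infinity.

```python
import math

inf = float("inf")

# Treated as an ordinary number, zero times infinity has no defined value.
zero_times_inf = 0 * inf
print(zero_times_inf)  # nan

# The wager's arithmetic for a small but non-zero probability p.
# Both p and the finite loss are invented for illustration.
p, finite_loss = 1e-9, 1000.0
expected_return = p * inf - (1 - p) * finite_loss
print(expected_return)  # inf


# Through limits, the answer depends on how fast each factor moves.
def product_to_zero(x):
    return (1 / x**2) * x  # the product shrinks toward 0


def product_to_constant(x):
    return (1 / x) * (5 * x)  # the product stays near 5


def product_to_infinity(x):
    return (1 / x) * x**2  # the product grows without bound


for x in (10.0, 1e3, 1e6):
    print(x, product_to_zero(x), product_to_constant(x), product_to_infinity(x))
```

In all three functions the first factor goes to zero and the second goes to infinity as x grows, yet the products head to three different places.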

Suppose, for example, you were given infinite wealth in your lifetime. It sounds great, but you can't spend an infinite amount of wealth in a finite amount of time. So there is really no distinction between an infinite amount of wealth and a very large but finite amount of wealth. In terms of utility theory, the utility of an infinite reward is finite. Pascal, of course, is referring to rewards and punishments in the afterlife, which is, by most accounts, infinite. Still, you need to be very very cautious about any mathematical statement involving the quantity of infinity.

I've since adopted a different perspective on Pascal's wager. Pascal's wager relies on a false dichotomy. It presumes there are only two choices, believe in God or don't believe in God. In reality there are more than two choices, as is illustrated by the following religious joke.

A Cardinal rushes in to tell the Pope "I have good news and bad news." The Pope says "Tell me the good news first." The Cardinal says "Jesus Christ has returned to the world. He is on the telephone right now and wants to talk to you." The Pope says "This is wonderful. How could there be any bad news after this?" The Cardinal looks down and then quietly replies "The call is originating from Salt Lake City."

This perspective is also noted in the wonderful Simpsons episode where Homer Simpson abandons his church. Marge is arguing with him, but Homer points out all the problems that attending church can cause. "Suppose we've chosen the wrong god. Every time we go to church we're just making him madder and madder." The whole episode is full of witty barbs about religion, though the final message is strongly religious in tone. A nice synopsis of this episode is at

--> http://www.snpp.com/episodes/9F01.html

In reality there are an infinite number of choices that would have an infinite expected value. An infinity of choices is not a good thing. You can't order dinner from a restaurant with an infinite number of entrees because the cook will die before you're halfway through with the menu.

But if you find Pascal's argument persuasive, then let me point out that if you sell your house, your car, and all your possessions, and give all the proceeds to me, there will be an infinite reward for you in heaven. Now the probability that my statement is true may be very small, but when you consider the possibility that it might lead to an infinite reward, you'd better do what I asked of you.

You can also try for a statistical proof of the existence of God with the various prayer studies. I also find these studies unpersuasive. It's difficult to summarize all these studies, but the basic research design is pretty simple. Get a group of patients who are sick or who are about to undergo a risky medical procedure. Randomly select half of them for the intervention group and half for the control group. The researchers ask one or more volunteers to say a prayer on behalf of each of the individuals in the intervention group. The researchers do nothing with the control group.
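The random assignment step is easy to sketch in Python. The patient IDs, the seed, and the arm names below are hypothetical, and a real trial would use whatever allocation procedure its protocol specifies.

```python
import random


def randomize(patient_ids, seed):
    """Shuffle the patients and split them into two equal-sized arms."""
    rng = random.Random(seed)  # fixed seed so the allocation can be reproduced
    shuffled = list(patient_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {
        "prayer": sorted(shuffled[:half]),
        "control": sorted(shuffled[half:]),
    }


arms = randomize(range(1, 21), seed=2012)  # 20 hypothetical patient IDs
print(arms["prayer"])
print(arms["control"])
```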

This is such an easy research design and I've even helped out with one of them (though I'm not sure if the results ever got published). This is easily run as a double blind trial. Even more interesting is that most of these studies did not require informed consent. I disagree with that choice, by the way, but apparently no one, even the most devout of atheists, gets upset if someone prays on their behalf. So this is one of the few research designs that is able to totally bypass the problems of selection bias.

An interesting side note is that I originally suggested to the researchers in the prayer study that I was involved with that they use a one-sided test. The IRB asked that this be changed to a two-sided test. Apparently someone thought that you should entertain the possibility that God would respond to prayers for an individual by punishing that person. You might be able to argue that if someone knew that a stranger was praying for them, it might make them nervous or otherwise upset them and could potentially alter the outcome. But this was a blinded study. I still think the IRB was wrong, but these details are not worth fighting over.
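To see what was at stake in that disagreement, here is a small Python sketch comparing the two p-values for the same test statistic. It assumes a simple z test, and the value 1.8 is hypothetical rather than anything from the actual study.

```python
from statistics import NormalDist


def p_values(z):
    """One-sided (benefit only) and two-sided p-values for a z statistic.

    Positive z is taken to mean the prayer group did better.
    """
    standard_normal = NormalDist()
    one_sided = 1 - standard_normal.cdf(z)
    two_sided = 2 * (1 - standard_normal.cdf(abs(z)))
    return one_sided, two_sided


one, two = p_values(1.8)  # hypothetical test statistic
print(round(one, 3), round(two, 3))
```

For a positive z, the two-sided p-value is exactly twice the one-sided value. With this statistic the result falls below 0.05 on the one-sided test but not on the two-sided test.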

Now, if the results of a prayer study are negative, does that prove that God does not exist? Of course not. If God is all powerful, then He is not required to provide statistical proof of His existence on demand. He can reveal Himself or not to whomever He chooses, as in the story of Thomas in the Gospel of John. In fact, you could even argue that attempting to reveal God through a statistical study is wrong. Note Jesus's comment in Matthew 4:7 "You shall not put the Lord your God to the test."

Even if the study is positive, it would not prove that God exists. A lot of paranormal researchers have suggested that prayer works in much the same way as clairvoyance or telekinesis does. If you believe that we humans have special but largely unrecognized powers that allow us to observe and influence events remotely, that bypasses the need for God in the hypothesis.

Actually, I'm glad that the flurry of interest in these prayer studies has died down. If you start testing prayer against placebo, it is a small step to testing Protestant prayer versus Catholic prayer, or trying to identify people who have a significantly better prayer cure rate than others.

There was an interesting criticism of the prayer studies that proposed that prayer was not adequately controlled. After all, you can't control how much your family and friends pray for you in addition to the intervention. Thus, the "dose" of prayer that you get is highly variable. I disagree with this argument. These prayer studies are about as well controlled as you can get. What you are studying is not prayer versus no prayer, but prayer of a stranger added on to the prayer that a patient already is getting.

It is indeed true that there is no "pure" control group in these studies. If you don't believe that, stop now and say a prayer on behalf of all those unfortunate people who were randomized to the control arms of these prayer studies. Actually, I can't remember where I saw this, but one researcher who had a negative result in the prayer study complained that the study failed because his opponents and critics had prayed for such a failure.

The important point here is that a "pure" control group never exists in ANY research study. Everyone in a research study today gets some level of medical care. Every intervention is an "add-on" to the care that is given in the control group. If it's not a problem in medical studies, why should it be a problem in prayer studies?

Another interesting criticism of the prayer studies is that this intervention has no plausible mechanism to support it. This is actually, in my opinion, a weakness of the mechanistic perspective on research: what's "plausible"? This is a highly individualistic word. There are many people who would find a relationship between prayer and health to be perfectly plausible. CAM researchers and traditional medicine researchers will also have highly varying perspectives on this word. So who decides what is plausible? Do we put it up for a vote, convene an expert panel, or just let everyone make their own choice? I'm not willing to disregard plausibility totally. In fact, I invoke plausibility regularly. Here you could argue that "plausible" is always modified by an adjective like "biological" or "scientific" so perhaps I'm being a bit unfair. Even so, I do believe that plausibility is a subjective standard by which you judge a research study and it carries all the baggage with it that any subjective standard would.

You might think that the people who run these prayer studies are doing so with good intentions, and for the most part you'd be right. But there was one interesting exception. A study in the Journal of Reproductive Medicine looked at in vitro fertilization rates in two groups, one of which got prayer. The results were quite large (50% success rate in the prayer group versus 26% success rate in the control group) and statistically significant (p=0.03). But one of the authors was later jailed for fraud. Admittedly, the fraud was unrelated to this research, but do you think that someone who used fraud in one area might have used fraud in another area as well? Bruce Flamm, a prominent critic of this study, noted that "A criminal who steals the identities of dead children to obtain bank loans and passports is not a trustworthy source of research data."

Another author was not involved with the study at all, but provided after-the-fact editorial guidance. There are expectations about the roles that authors play in research and this action might also be considered fraud. There were even questions about whether the stated affiliation of the third author with Columbia University was fraudulent. All of this raises an interesting theological question about whether it is appropriate to use fraudulent methods to encourage more people to pray.

There's lots of interesting commentary about the in vitro prayer study:

--> http://www.improvingmedicalstatistics.com/Columbia%20Miracle%20Study1.htm

--> http://seattletimes.nwsource.com/html/nationworld/2002121132_prayer16.html

--> http://www.ebm-first.com/prayer/columbia-prayer-study-controversy.html

Another interesting study of prayer is also worth highlighting. The Christmas issue of the British Medical Journal is filled with light-hearted and humorous research studies. One year, they published a retrospective prayer study. Let me repeat that: a retrospective prayer study.

--> L Leibovici. Effects of remote, retroactive intercessory prayer on outcomes in patients with bloodstream infection: randomised controlled trial. BMJ 2001;323(7327):1450–1451. http://www.ncbi.nlm.nih.gov/pubmed/11751349.

The researchers got a file of medical records and split them into two groups. One group of charts got prayed over and the other group did not. The researchers then tallied the results and the patients in the prayer pile had better outcomes than the patients in the control pile. Keep in mind that the prayer occurred AFTER the outcome. This raises the stakes on biological plausibility to an even higher level. But if you believe in an all-powerful God, then you have to believe that God can change events that have occurred in the past. Or perhaps God controlled the randomization process.

Now the authors keep their cards close to the vest, but if you read the article carefully, you'll see that it is an attempt to mock all of the previous prospective prayer studies that have been conducted. Do you think that remote intercessory prayer has any biological plausibility? Well then, you'd have to believe the plausibility in this study as well.

Still, some people didn't get the joke. A later article in BMJ tries to provide a mechanistic explanation for this retrospective result.

--> Brian Olshansky, Larry Dossey. Retroactive prayer: a preposterous hypothesis? BMJ. 2003;327(7429):1465–1468. http://www.ncbi.nlm.nih.gov/pubmed/14684651.

It relied on highly abstract theories in Physics that may have some value in studying how sub-atomic particles behave but which have no relevance to events at our scale. I criticized the subjectivity of biologically plausible mechanisms earlier, but even subjective standards have common sense limits. If the only mechanism that the researchers can invoke involves "Bosonic string quantum mechanics" or "Calabi-Yau space" then you can be sure that there is no plausible mechanism.

Dr. Martin Bland, a statistician far more famous than I will ever hope to be, pointed out that ethical conduct of research studies requires that when you find an intervention that works, you are obligated then to repeat the experiment by offering the proven effective treatment to the control group. So why didn't the authors pray over the control stack of medical records after they found their results?

--> http://www.bmj.com/rapid-response/2011/10/28/treat-control-group

Did you like this article? Visit http://www.pmean.com/category/HumanSideStatistics.html for related links and pages.

--> Monthly Mean Article (peer reviewed): Benjamin Kasenda, Erik B von Elm, John You, Anette Blümle, Yuki Tomonaga, Ramon Saccilotto, Alain Amstutz, Theresa Bengough, Joerg Meerpohl, Mihaela Stegert, Kari AO Tikkinen, Ignacio Neumann, Alonso Carrasco-Labra, Markus Faulhaber, Sohail Mulla, Dominik Mertz, Elie A Akl, Dirk Bassler, Jason Busse, Ignacio Ferreira-González, Francois Lamontagne, Alain Nordmann, Rachel Rosenthal, Stefan Schandelmaier, Xin Sun, Per O Vandvik, Bradley C Johnston, Martin A Walter, Bernard Burnand, Matthias Schwenkglenks, Heiner C Bucher, Gordon H Guyatt and Matthias Briel. Learning from Failure - Rationale and Design for a Study about Discontinuation of Randomized Trials (DISCO study). BMC Medical Research Methodology 2012, 12:131 doi:10.1186/1471-2288-12-131. Abstract (provisional): Background: Randomized controlled trials (RCTs) may be discontinued because of apparent harm, benefit, or futility. Other RCTs are discontinued early because of insufficient recruitment. Trial discontinuation has ethical implications, because participants consent on the premise of contributing to new medical knowledge, Research Ethics Committees (RECs) spend considerable effort reviewing study protocols, and limited resources for conducting research are wasted. Currently, little is known regarding the frequency and characteristics of discontinued RCTs. Methods: Our aims are, first, to determine the prevalence of RCT discontinuation for any reason; second, to determine whether the risk of RCT discontinuation for specific reasons differs between investigator- and industry-initiated RCTs; third, to identify risk factors for RCT discontinuation due to insufficient recruitment; fourth, to determine at what stage RCTs are discontinued; and fifth, to examine the publication history of discontinued RCTs. We are currently assembling a multicenter cohort of RCTs based on protocols approved between 2000 and 2002/3 by 6 RECs in Switzerland, Germany, and Canada. 
We are extracting data on RCT characteristics and planned recruitment for all included protocols. Completion and publication status is determined using information from correspondence between investigators and RECs, publications identified through literature searches, or by contacting the investigators. We will use multivariable regression models to identify risk factors for trial discontinuation due to insufficient recruitment. We aim to include over 1000 RCTs of which an anticipated 150 will have been discontinued due to insufficient recruitment. Discussion: Our study will provide insights into the prevalence and characteristics of RCTs that were discontinued. Effective recruitment strategies and the anticipation of problems are key issues in the planning and evaluation of trials by investigators, Clinical Trial Units, RECs and funding agencies. Identification and modification of barriers to successful study completion at an early stage could help to reduce the risk of trial discontinuation, save limited resources, and enable RCTs to better meet their ethical requirements. Available at http://www.biomedcentral.com/1471-2288/12/131/abstract

Did you like this article? Visit http://www.pmean.com/category/AccrualProblems.html for related links and pages.

--> Monthly Mean Article (popular press): Robert Groves. Census surveys: Information that we need. The Washington Post, July 17, 2012. Description: The director of the U.S. Census Bureau explains the value of the American Community Survey, which provides information on small geographic areas of the United States. This survey provides vital information for efficient expenditures by businesses and local governments. [Accessed on September 4, 2012]. http://www.washingtonpost.com/opinions/census-surveys-provide-information-that-we-need/2012/07/19/gJQA66wWwW_story.html.

Did you like this article? Visit http://www.pmean.com/category/SurveyDesign.html for related links and pages.

--> Monthly Mean Book: Thomas Gilovich. How We Know What Isn't So: The Fallibility of Human Reason in Everyday Life. Description: There are lots of books on critical thinking skills. This is one of the earliest, but also one of the best. Here's a nice description from the Amazon website: "When can we trust what we believe - that "teams and players have winning streaks", that "flattery works", or that "the more people who agree, the more likely they are to be right" - and when are such beliefs suspect? Thomas Gilovich offers a guide to the fallacy of the obvious in everyday life. Illustrating his points with examples, and supporting them with the latest research findings, he documents the cognitive, social and motivational processes that distort our thoughts, beliefs, judgements and decisions. In a rapidly changing world, the biases and stereotypes that help us process an overload of complex information inevitably distort what we would like to believe is reality. Awareness of our propensity to make these systematic errors, Gilovich argues, is the first step to more effective analysis and action."

Did you like this book? Visit http://www.pmean.com/category/CriticalAppraisal.html for related links and pages.

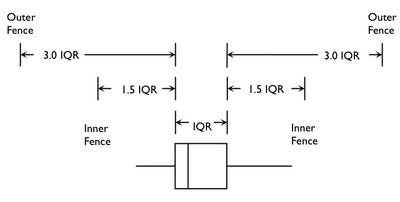

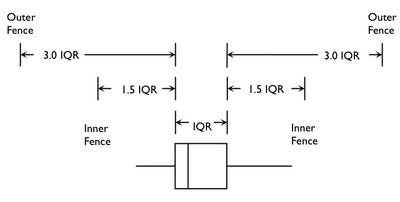

--> Monthly Mean Definition: What are the inner and outer fences in a boxplot? The boxplot was originally designed, in part, to allow easy highlighting of individual data points that are outliers. Outliers represent points outside the inner fence and extreme outliers represent points outside the outer fence. The inner and outer fences are defined using multiples of the width of the box in a boxplot, which represents the interquartile range.

Recall that the box in a boxplot extends from the 25th percentile to the 75th percentile. The width of the box, therefore, represents the interquartile range (IQR), a common alternative to the standard deviation as a measure of how spread out the data points are in a data set. Unlike the standard deviation, the IQR is largely uninfluenced by extreme data points. This is important because an outlier can inflate the standard deviation by quite a bit. If you measure an outlier by how many standard deviations it is away from the mean, you can sometimes miss outliers. Even if you don't miss the outlier, you might grossly understate how extreme it is.
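Here is a small sketch of that point in Python, using made-up numbers rather than data from any real study. The helper names (z_score, iqr) are my own, and the quartiles come from the default method in Python's statistics module, which may differ slightly from what your software uses. It compares the standard deviation and the IQR before and after one wild value is added.

```python
import statistics

def z_score(value, data):
    """Number of standard deviations that value lies from the mean of data."""
    return (value - statistics.mean(data)) / statistics.stdev(data)

def iqr(data):
    """Interquartile range: 75th percentile minus 25th percentile."""
    q1, _, q3 = statistics.quantiles(data, n=4)
    return q3 - q1

# Hypothetical data: eight ordinary values, then the same values plus one wild one.
clean = [60, 61, 61, 62, 62, 63, 63, 64]
with_outlier = clean + [100]

sd_clean = statistics.stdev(clean)
sd_outlier = statistics.stdev(with_outlier)
iqr_clean = iqr(clean)
iqr_outlier = iqr(with_outlier)
z_of_100 = z_score(100, with_outlier)

print(f"SD without and with the wild value:  {sd_clean:.2f}, {sd_outlier:.2f}")
print(f"IQR without and with the wild value: {iqr_clean:.2f}, {iqr_outlier:.2f}")
print(f"The value 100 is {z_of_100:.2f} SDs above the mean")
```

With these numbers the wild value inflates the standard deviation so much that it ends up less than three standard deviations from the mean, while the IQR barely moves.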

This graphic image illustrates the calculation of the inner and outer fence. The width of the box is the IQR. Anything more than 1.5 IQRs below the 25th percentile or more than 1.5 IQRs above the 75th percentile is considered outside the inner fence. Anything more than 3.0 IQRs beyond either side of the box is considered beyond the outer fence.

If the 25th percentile is 60 and the 75th percentile is 64, then the interquartile range is 4. Calculate 1.5 IQRs (6) and subtract it from the 25th percentile to get 54. Add it to the 75th percentile to get 70. So anything below 54 or above 70 is outside the inner fence. Calculate 3.0 IQRs (12) and subtract from the 25th percentile to get 48. Add to the 75th percentile to get 76. Anything below 48 or above 76 is outside the outer fence.
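If you would rather let the computer do this arithmetic, here is a minimal sketch in Python. The function names (boxplot_fences, classify) are mine, not taken from any statistics package, and the code assumes you already know the 25th and 75th percentiles.

```python
def boxplot_fences(q1, q3):
    """Return the inner and outer fences given the 25th and 75th percentiles."""
    iqr = q3 - q1
    return {
        "inner": (q1 - 1.5 * iqr, q3 + 1.5 * iqr),
        "outer": (q1 - 3.0 * iqr, q3 + 3.0 * iqr),
    }

def classify(value, fences):
    """Label a value as inside, an outlier, or an extreme outlier."""
    outer_low, outer_high = fences["outer"]
    inner_low, inner_high = fences["inner"]
    if value < outer_low or value > outer_high:
        return "extreme outlier"
    if value < inner_low or value > inner_high:
        return "outlier"
    return "inside"

# The example from the text: 25th percentile of 60, 75th percentile of 64.
fences = boxplot_fences(60, 64)
print(fences["inner"])  # (54.0, 70.0)
print(fences["outer"])  # (48.0, 76.0)
for value in (62, 72, 80):  # hypothetical data values
    print(value, classify(value, fences))
```

A value sitting exactly on a fence is treated as inside here, which matches the "more than" wording of the definition.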

Any outliers, points beyond the inner fence, are highlighted by the character "O" in SPSS. Any extreme outliers, any points beyond the outer fence, are highlighted by the character "*" in SPSS. By default, SPSS will identify the row number of any outliers or extreme outliers.

If there are points beyond either fence, then the "whisker" will not extend to the maximum or minimum, but rather to the furthest point that is still inside the inner fence. Most other software packages have similar behavior, though this is not uniformly consistent.
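The whisker rule can be sketched the same way. This is only an illustration under my own assumptions: whisker_ends is a made-up name, the data are invented, and the quartiles come from the default method in Python's statistics module, so SPSS or another package may place the box (and therefore the fences) slightly differently.

```python
import statistics

def whisker_ends(data):
    """Return the lowest and highest data points that are not beyond the inner fence."""
    q1, _, q3 = statistics.quantiles(data, n=4)
    step = 1.5 * (q3 - q1)
    lower_fence, upper_fence = q1 - step, q3 + step
    inside = [x for x in data if lower_fence <= x <= upper_fence]
    return min(inside), max(inside)

# Hypothetical data with one value far above the rest.
data = [60, 61, 61, 62, 62, 63, 63, 64, 100]
low, high = whisker_ends(data)
print(f"Whiskers run from {low} to {high}; the maximum is {max(data)}")
```

When nothing falls beyond the inner fence, the same function simply returns the minimum and maximum.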

I personally dislike the use of the inner and outer fence as I believe it highlights too many data points that are really just fine. This encourages dangerous deletion of valid data values. But my perspective probably makes me an outlier among practicing statisticians, if not an extreme outlier.

Did you like this article? Visit http://www.pmean.com/category/GraphicalDisplay.html for related links and pages.

--> Monthly Mean Quote: Statistics are for losers. As I remember it, this quote is from Vince Lombardi, the 1960s coach of the Green Bay Packers, a U.S. football team. My father razzed me with this quote when he found out what I was majoring in at college. I don't actually believe that I and all you readers of this newsletter are losers. The quote is a reference to how people will use statistics to justify why they should have won. I tried to find a definitive source for this quote on the Internet, but failed, as it is attributed to a wide variety of people.

--> Monthly Mean Video: Andrew Gelman, Jennifer Hill, Masanao Yajima. Why we (usually) don't have to worry about multiple comparisons. Description: This is a poor quality video with uneven sound levels that alternates haphazardly between the speaker and the PowerPoint slides. Nevertheless, it carries an important message: if you use Bayesian hierarchical models, the multiple comparisons problem often disappears. This talk was later turned into a paper which appears in the Journal of Research on Educational Effectiveness, 5: 189-211, 2012. [Accessed on September 5, 2012]. http://www.stat.columbia.edu/~martin/Workshop/statistics_neuro_data_931_speaker_04.mov.

Did you like this video? Visit http://www.pmean.com/category/MultipleComparisons.html for related links and pages.

--> Monthly Mean Website: Jean Adams, Martin White. How to be the perfect research student, part 1: process matters. Published on the Fuse open science blog, July 23, 2012. Excerpt: "Being a research student isn't always easy. But nor is supervising research students. We have spent many unproductive hours ranting about the things that research students should, but don't always, do to make their, and our, lives easier. Here they are in one easy list (in two parts...). " [Accessed on August 7, 2012]. http://fuseopenscienceblog.blogspot.co.uk/2012/07/how-to-be-perfect-research-student-part.html.

Did you like this website? Visit http://www.pmean.com/category/WritingResearchPapers.html for related links and pages.

--> Nick News: Nick goes crazy fishing. I am not a big fan of fishing, but it appears to be something that Nicholas just loves. Here are some pictures at recent fishing events, either those sponsored by his local Cub Scout Pack or fishing on his own.

Here's a big catfish that he caught.

I believe this is a largemouth bass. Nicholas has wet hair because he heard it is best to fish in the rain, and he stayed out during a rainstorm.

I retreated to the car, but that didn't mean that I got away from fishing. Here Nicholas brings me a recent catch, a green sunfish, I think.

There are many more pictures at

--> http://www.pmean.com/personal/fish.html

--> Very bad joke: "The probability of someone watching you is proportional to the stupidity of your action." Found at http://www.netfunny.com/rhf/jokes/90q4/manylines.40.html

--> Tell me what you think. How did you like this newsletter? Give me some feedback by responding to this email. Unlike most newsletters where your reply goes to the bottomless bit bucket, a reply to this newsletter goes back to my main email account. Comment on anything you like but I am especially interested in answers to the following three questions.

--> What was the most important thing that you learned in this newsletter?

--> What was the one thing that you found confusing or difficult to follow?

--> What other topics would you like to see covered in a future newsletter?

I didn't get any feedback from the last newsletter, other than a comment about the Paris trip. I get lots of email, so if you sent me a comment and I missed it, I apologize.

--> Join me on Facebook, LinkedIn, and Twitter. I'm just getting started with social media. My Facebook page is www.facebook.com/pmean, my page on LinkedIn is www.linkedin.com/in/pmean, and my Twitter feed name is @profmean. If you'd like to be a Facebook friend, LinkedIn connection (my email is mail (at) pmean (dot) com), or tweet follower, I'd love to add you. If you have suggestions on how I could use these social media better, please let me know.

--> Permission to re-use any of the material in this newsletter. This newsletter is published under the Creative Commons Attribution 3.0 United States License, http://creativecommons.org/licenses/by/3.0/us/. You are free to re-use any of this material, as long as you acknowledge the original source. A link to or a mention of my main website, www.pmean.com, is sufficient attribution. If your re-use of my material is at a publicly accessible webpage, it would be nice to hear about that link, but this is optional.

This work is licensed under a Creative Commons Attribution 3.0 United States License. This page was written by Steve Simon and was last modified on 2010-11-01.
